Still a little tweaking to do on heat control but not real bad. First batch of WUs is running out at 275,000 PPD

Join the SatelliteGuys Folding@Home Team!

- Thread starter Scott Greczkowski

- Replies 5K

- Views 395K

In two hours I will complete my 100th WU and with that unit I hit the 1M point mark too

Congratulations to king3pj for passing 6,000,000 Points! (okay, maybe a little early...)

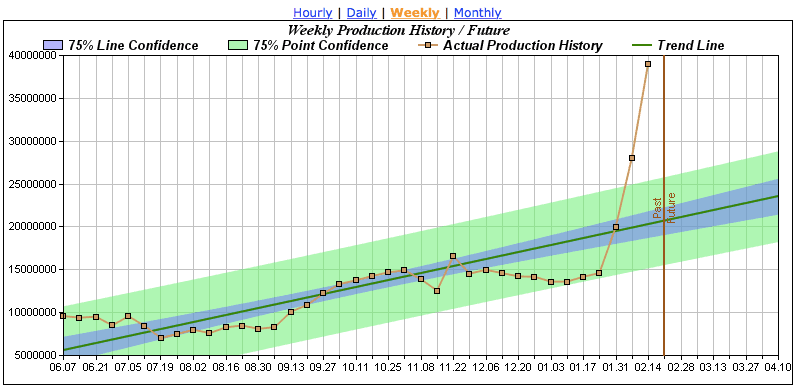

We are on track to pass 100,000,000 Points in a month. Awesome work! I like the Weekly trend:

Up, Up, and Away!

Well, the T7400 did not like working with the 980 and 750ti. It keeps rebooting. I need to review the error logs to try to find out what is causing the problem. In the meantime, I have removed the 750ti and will put it in another machine.

A side exhaust fan down by the two video cards would probably take care of any heat issues. Nice, clean looking rig.

In two hours I will complete my 100th WU and with that unit I hit the 1M point mark too

Congrats!!!!!!

It could be a heat issue. I would run HWInfo while you fold for a while and check on the temperatures. This is free software that will give you maximum, average, and current statistics for all of your hardware. You are going to want to keep an eye on the CPU and GPU max and average temperatures. You will need to check this before it crashes to see the data.

With two CPUs and two GPUs you do have a lot of things kicking heat into the case. It is possible that either the CPUs or the GPUs are triggering the reboots due to overheating. Your GPU should be able to reach 98C before shutting down to protect itself but you obviously wouldn't want to run it nearly that hot on a regular basis. CPU shutdown temperature varies by model and I'm not sure what the numbers are for your particular CPU. I don't think anything below 80C would trigger shutdown on most CPUs though. The real number is probably quite a bit higher than that.
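If you end up logging temperatures by hand instead of reading them off HWInfo, here is a rough Python sketch of the same current/max/average bookkeeping. The 98C and 80C figures are just the ballpark numbers mentioned above, the 10-degree margin is my own assumption, and the sample readings are made up.

```python
from statistics import mean

# Ballpark limits from the discussion above. The CPU figure is only a rough
# lower bound, not a spec for any particular chip.
GPU_SHUTDOWN_C = 98
CPU_WORRY_C = 80

def summarize(readings_c):
    """Return the current, maximum, and average of a list of temperature samples."""
    if not readings_c:
        raise ValueError("need at least one reading")
    return {
        "current": readings_c[-1],
        "max": max(readings_c),
        "avg": round(mean(readings_c), 1),
    }

def too_hot(readings_c, limit_c, margin_c=10):
    """True if the hottest sample came within margin_c degrees of the limit.

    margin_c is a hypothetical safety margin, not a vendor number.
    """
    return max(readings_c) >= limit_c - margin_c

# Made-up GPU samples taken while folding.
gpu_log = [61, 64, 68, 67, 68]
print(summarize(gpu_log))
print(too_hot(gpu_log, GPU_SHUTDOWN_C))
```

The point is the same as with HWInfo: look at the maximum and average over the run, not just the number on screen when you happen to check.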

I think it is most likely a power supply issue. Even if it came with a 1000W unit the Dell OEM PSUs aren't known for being the highest quality. This is why most people at Tom's Hardware recommend replacing them when converting a Dell PC to a gaming PC even if the rated wattage is high enough. These are typically standard home PCs though. A Dell server grade machine might ship with higher quality hardware.

Plus it's an older machine and power supplies are known to degrade over time, especially if they weren't a high quality model to begin with.

And if you are shopping for power supplies, look for an 80 Plus unit (Platinum preferred). With the electricity and heat it saves, it will pay for itself in a folding rig.

I use MSI Afterburner to monitor the video cards. The 980 was running at 68C and the 750 at 60C when the last reboot occurred. I also set a fan profile for each video card to keep them cool. The 750 is bus powered and does not need additional power. The T7400 has been rock steady otherwise with just the 980 in it.

That proves that the GPU temps aren't the problem but it hasn't cleared the CPUs. Like I said in my last post, I think it's more likely the power supply than a temperature problem but it's worth checking on your CPU temps before spending money since that can be done for free.

Try this.

Install just the 750 Ti, uninstall any graphics drivers, then go to NVIDIA's website and, when selecting the driver, tell it you have a 750 Ti.

Download and install. Reboot and see how it runs for a few hours.

Then put the 980 back in, leaving drivers as is. See what that does.

I have a 970 and a 770. Mine was not rebooting but just acting real sluggish, like it was a computer from the 90's.

Swapped cards around, tried this, tried that...then I decided to just put in one card at a time and see what happened.

That is when I discovered it would not fold with the 900 series drivers with the 770.

Did what I said above and now it is humming along.

I will try this when I get the chance. Thanks. I didn't think about the different driver for the 750ti.

I briefly thought about it and then forgot...and assumed they would be the same.

Even EVGA Precision X screamed "non-supported video card" until I installed drivers, specifically choosing a 700 series card.

Don't know if it will help you but worth a shot I guess.

Even though the 750 Ti is bus powered it still uses about 60W. This is almost nothing compared to other GPUs but it is still additional strain.

The other thing that is important when considering power supplies is how many amps are available on the 12V rail. This might be harder to look up specs for on an OEM power supply.
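To put rough numbers on the 12V rail point, here is a minimal Python sketch of the arithmetic (amps = watts / 12). It assumes every load draws entirely from the 12V rail, which is a simplification. Only the 60W figure for the 750 Ti comes from this thread. The 18A rail rating and the 150W second load are hypothetical placeholders, so substitute whatever is printed on your own PSU label.

```python
def amps_on_12v(watts):
    """Current drawn from a 12V rail by a load of the given wattage."""
    return watts / 12.0

def rail_headroom(rail_amps, load_watts):
    """Amps left on the rail after all listed loads (negative means overloaded)."""
    return rail_amps - sum(amps_on_12v(w) for w in load_watts)

# The 750 Ti at about 60W, the figure mentioned above.
print(amps_on_12v(60))

# Hypothetical rail rated at 18A carrying the 750 Ti plus a made-up 150W load.
print(rail_headroom(18, [60, 150]))
```

A rail with little or no headroom under full folding load would fit the random-reboot symptom, though it doesn't prove it.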

It could be a driver issue but I haven't heard of those causing a PC to reboot before. Sometimes the screen will go black if the display driver crashes but the PC itself doesn't usually shut down.

It's worth trying any fixes you can try for free before spending money on a new power supply. I just know that random reboots under load are very often the result of a power supply problem. They can also be a sign of CPU instability but if you haven't overclocked the CPUs it's rare for that to happen.

Running a 750 and a 980 together brings up an interesting issue. Can you run different drivers for the two cards? Using an NVIDIA driver later than 327.23 for the 750 causes its production to drop by about half. I don't know if 327 supports the 980 but the latest drivers do support the 750. I'd still look at the power and CPU temps too.

I have been trying some different configurations and I think I have it all set for now. I am running only three GPU clients on the two computers. I discontinued the CPU client in the PC with the two 960s. Oddly enough the PPD went up and my temps went down. It looks like I'll be in the 350,000 PPD range with the three clients.

Not all that surprising. Each GPU client imposes a load on the CPU. Two GPUs plus the CPU client should have the CPU running flat out. There's the explanation for the heat. The fact that the CPU is no longer maxed out could mean that the GPUs get serviced quicker and therefore produce more. I may be wrong but there is logic (and lore (BS?) that I've accumulated over the years) there.

To be fair, are we talking non-HT Xeon or HT Core i7 CPUs? Two identical cores are better than a single HyperThreaded core even though they appear as two cores to the OS. According to the Folding Forum, each NVIDIA GPU Slot uses a Floating Point Core. The dual E5450 Xeon CPUs in my T7400 have 8 FPUs vs. a single Core i7's 4 FPUs. So I could see how the FAH Client might configure 6 cores for the CPU Slot even though it might only have 2 FPUs available.
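The core counting above works out like this in a quick Python sketch. It assumes, as speculated above, that the client sizes the CPU slot as logical cores minus GPU slots, and that each GPU slot ties up one physical FPU. Neither is confirmed behavior, and the function name is made up for illustration.

```python
def cpu_slot_budget(physical_cores, logical_cores, gpu_slots):
    """Return (threads the CPU slot might be given, FPUs actually left over).

    Assumption: the CPU slot gets logical_cores - gpu_slots threads, while
    each GPU slot occupies one physical floating point core.
    """
    configured = max(logical_cores - gpu_slots, 0)
    free_fpus = max(physical_cores - gpu_slots, 0)
    return configured, free_fpus

# HyperThreaded Core i7: 4 physical cores, 8 logical, two GPU slots.
print(cpu_slot_budget(4, 8, 2))

# Dual E5450 Xeons (no HT): 8 physical cores, 8 logical, two GPU slots.
print(cpu_slot_budget(8, 8, 2))
```

If that model holds, the i7 ends up with a CPU slot wider than the FPUs left to back it, while the dual Xeons do not.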

I'm not sure about the difference in the NVIDIA GeForce drivers for Ubuntu versus those for Windows. I know there was discussion about Maxwell GM10x cards not working as well using the GM20x drivers, but I'm not sure I see that with my rig.

On the Dell T7400 you can select which order the Graphics Cards are polled in the BIOS. You might check to see which order you have set. I'm not sure if it makes any difference when it comes time for the OS to assign device assignments.

The one other thing I found dinking around with swapping GPUs was the importance of Finishing Folding, Pausing Folding after all the WUs have finished, manually deleting the GPU slots, shutting down the FAH Client service, and deleting the Work folders & Queue DB. Then you can safely shut down and play musical chairs with your graphics cards. You do need to add the GPU Slot(s) manually after starting back up, but not clearing the previous configuration leads to issues like I have reported in previous posts.

If you don't manually delete the GPU Slot before removing a GPU the FAH Control will not connect to the FAH Client when you start back up. Then you need to shut down the FAH Client service and manually edit the CONFIG.XML file to delete the GPU Slot from the file. Fun Stuff!
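For the config file edit, here is a hedged Python sketch of stripping GPU slots. It assumes the file keeps each slot as a `<slot id='N' type='GPU'/>` element directly under `<config>`, so check your own file first. The sample text below is made up, and the sketch works on a string so nothing on disk is touched. Stop the FAH Client service and keep a backup copy before changing the real file.

```python
import xml.etree.ElementTree as ET

# Made-up sample of what the file is assumed to look like.
SAMPLE = """<config>
  <user v='example'/>
  <slot id='0' type='CPU'/>
  <slot id='1' type='GPU'/>
</config>"""

def drop_gpu_slots(xml_text):
    """Return the config text with every GPU slot element removed."""
    root = ET.fromstring(xml_text)
    # findall returns a list, so removing while looping over it is safe.
    for slot in root.findall("slot"):
        if slot.get("type", "").upper() == "GPU":
            root.remove(slot)
    return ET.tostring(root, encoding="unicode")

print(drop_gpu_slots(SAMPLE))
```

Note that ElementTree drops XML comments when it parses, so any comment lines in the original file would not survive a round trip through this.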

Still waiting to break the 500K PPD Avg barrier. *sigh*

Sure, I post that, fully expecting the update to come through and king3pj would tick over the next milestone. Instead he appears to have pulled the plug on his production. Zippo all day.

That's temporary. I've got some new furniture coming and I'm rearranging my home office. My PC is currently in a closet out of the way.