Ravi Thakkar

Summary

The AR/VR market is slated to increase 30x by 2020.

All the big boys are playing with AR toys, competing to bring devices to market.

What is Apple doing about it?

After about five years working its way through the USPTO, Apple (NASDAQ:AAPL) was granted a patent, application number US15138931, titled "Transparent Electronic Device," which could bring great changes to how we think about the iPhone. This is not Apple's first augmented reality (AR) patent, but it is the one that describes the futuristic scenario most clearly. Given that IDC forecasts the AR/VR market to grow 30-fold in the next few years, from $5.2 billion today to over $160 billion in 2020, Apple's forays into AR take on new significance. In fact, the same report says it is AR that has the more useful potential, and, as we shall soon see, Tim Cook also seems to confirm that AR is where Apple's focus lies. In this article, I will take a brief look at the relevant patent and what it might mean for a future iPhone.

Augmented reality, as we know, adds a virtual element to reality, augmenting it visually, haptically or kinesthetically. This is unlike virtual reality, where the real world disappears into an immersive, interactive, completely unreal reality. In AR, the rest of the visual, tactile and sensory world remains the same, except for a small part, such as a projected image or another sensory depiction, that is not real. In visual terms, this is often a projection that can be "seen through," unlike in VR, where anything you might see through a VR image is also VR.

It is interesting that Tim Cook, who, following a long Apple tradition, keeps very quiet about sight-unseen futuristic developments at Apple, recently said the following about its AR work:

"AR I think is going to become really big," said Cook. "VR, I think, is not gonna be that big, compared to AR … How long will it take? AR gonna take a little while, because there's some really hard technology challenges there. But it will happen. It will happen in a big way. And we will wonder, when it does (happen), how we lived without it. Kind of how we wonder how we lived without our (smartphones) today."

In another recent interview, Tim Cook talked about how AR, not VR, is the core technology and how Apple is working on AR projects behind the scenes. Add to that the fact that the new patent came out just a month or two before these interviews, and one senses that something interesting is developing at Apple.

What patent US15138931 actually says

With the caveat that a patent often doesn't sound as interesting or useful as the invention it describes, here's what the patent says about the concept:

A method and system for displaying images on a transparent display of an electronic device. The display may include one or more display screens as well as a flexible circuit for connecting the display screens with internal circuitry of the electronic device. Furthermore, the display screens may allow for overlaying of images over real world viewable objects, as well as a visible window to be present on an otherwise opaque display screen. Additionally, the display may include active and passive display screens that may be utilized based on images to be displayed.

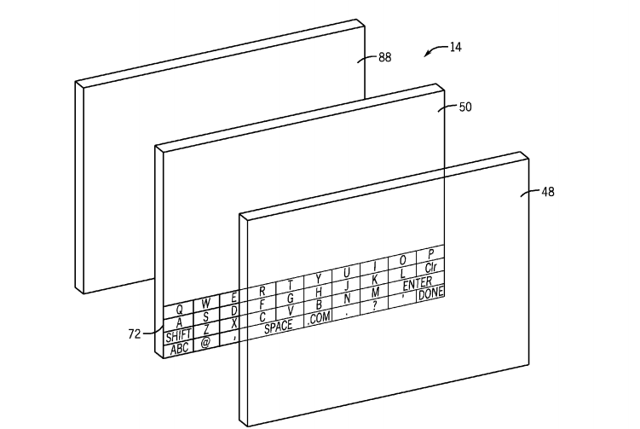

What, exactly, will this patent do to an iPhone? Well, if deployed, it may make the iPhone's screen almost transparent, with possibly a small masked, opaque portion hiding the electronic innards. The screen, which can be made transparent on demand, will let users view objects behind the iPhone, through the iPhone, so that it works like an overlay that can display information about whatever object is behind it. Even more interesting, in one embodiment of the technology, Apple claims that the iPhone "may include two or more of such display screens (each having respective viewing areas with transparent portions) arranged in an overlaid or back-to-back manner." Using multiple overlaid display screens opens up the possibility of 3D holographic imagery. Apple actually goes on to discuss "sensed rotation" of the object, which can be displayed using a set of active and passive transparent display screens.

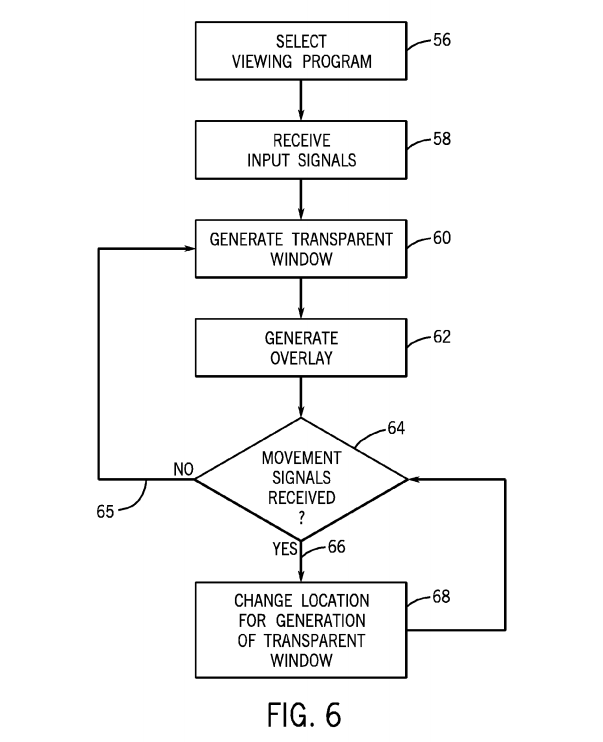

The movement and multiple-transparent-window concept is nicely illustrated in the following diagram:

Source - USPTO

As the diagram above shows, the transparent window on the iPhone or other device can be generated by the user on demand. It can also represent motion very realistically.

What a future iPhone may look like

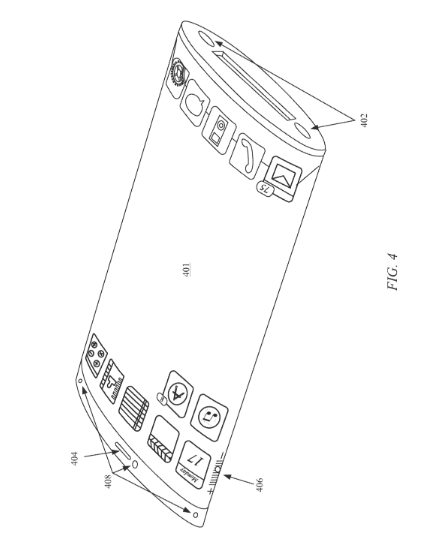

If this invention is ever deployed (and from what Tim Cook says, Apple is working on it), the iPhone will get its greatest makeover since it was first introduced. The iPhone's beauty is that it already looks semi-transparent. With this technology, its entire screen, except for a small area hiding the circuitry, will become transparent on demand. It will not be 100% transparent, like glass, but will have a darker transparency, like the display Samsung demonstrated last year.

Source - Note the transparency of the slightly darkish screen

It may also look like the following if it uses multiple passive and active display screens, as discussed above.

Source - Wraparound glass display iPhone

The wraparound display can be viewed as a set of at least two displays, which could make motion imagery more vivid and lifelike.

How can this be useful

Well, besides the sheer wow factor of having an iPhone turn into a Star Trek-like AR device, there are multiple possible uses for this AR technology, some of which Apple itself has outlined.

In one concept, this AR iPhone can be used in a setting such as a museum, where the "overlays virtually interact with real-world objects." In a museum, an exhibit like a painting may have an overlay on one display screen, while information about the painting (taken from technology present in the museum, stored on the phone, or pulled from the Internet) could be displayed on the second screen. The same thing could be possible outside a closed setting. You could look at a scenic view of the Alps, for example, through your iPhone's transparent screen, and if you zoom in, you could see information about the height of a peak, a climbing route or a trekking route, displayed right across the screen over the background. No, I am not writing science fiction: the Apple patent application says most of these things.

It is also possible to generate a transparent window with a tap or a double tap. From the language of the patent: "This tap or double tap input by a user may cause the processor(s) 24 to change the voltage driven to an area of the display screen 50 corresponding to the location of the tap or double tap by the user such that the pixels in the area are driven to voltages that cause the area to be transparent." So the rest of the screen will stay opaque, but right next to, say, a set of information about an outside object displayed on the opaque screen, you could create a transparent window to actually see that object. This isn't just uber cool; it could be a very useful feature in a number of settings where a more realistic visualization of information helps a user understand something more clearly. Surgery is one example: someday a surgeon may tap his iPhone in an operating room to figure out what that thing is doing inside your stomach, or whatever.

Another application could be 3D display. As inventor Aleksandar Pance notes, the iPhone may include multiple rear-facing cameras, and images captured by them could be combined into a three-dimensional, real-time overlay of what's behind the phone.

When will it come to the market

Apple has been quietly building up assets in the AR/VR technology arena. For a number of years now, the company has been buying into AR/VR technology companies and hiring key personnel with expertise in the area. It hired Microsoft's Nick Thompson, who has worked on HoloLens, and Bennett Wilburn, who has also worked in machine learning. Recently, Apple hired Doug Bowman, who is developing pioneering technology in 3D user interfaces. Apple has also hired Zeyu Li, who worked at AR startup Magic Leap, which is developing extremely cool new technology in AR.

Besides hiring personnel, Apple is also acquiring key assets in AR/VR, as when it purchased PrimeSense in 2013 for $345 million. PrimeSense was the original developer of the technology behind Xbox Kinect, which could be controlled using voice and gestures. In May 2015, Apple acquired Metaio, a German company that has produced cool projects in AR.

All these hires and purchases tell me that Apple is working fast to produce something marketable in the very near future. This year is already gone, and next year's product launches are probably already lined up, but by 2018, I expect the first workable AR-based i-device to come to market. It may not immediately be bundled into the iPhone: the iPhone launch is Apple's most important event, and AR is still an iffy market. However, one would expect gradual integration of the technology into the iPhone if it catches on with initial customers.