HD Picture Quality

- Thread starter redelephants

Zohan did it. Have no idea, can't check. I'm burning off stuff to DVD to send to my daughter for X-mas. BTW, underline didn't seem to work.

Well, the next movie looks a little better, but it is still lacking detail. Maybe this is one of the channels running at 3 Mbit/s.

You people need to stop. If someone from Dish reads this they'll think their picture is good enough. Far from it if you ask me, considering I still see differences between OTA and Dish on my locals.

I noticed this over a year ago and when I confronted DISH, they admitted that there are software issues that were being tended to. That may be part of the increase in PQ. They are getting things fixed.

But I had a Field Supervisor at my place a couple of weeks ago, and we spent a couple of hours doing direct comparisons of something he said they had heard about but that no one had been able to effectively compare in the field. There were several movies on HBO that I have on BD and DVD, plus OTA vs. DISH locals, where we were able to easily switch back and forth, and he said he could see a difference that should not be there. He was very grateful and thanked me repeatedly for allowing him to see this. Maybe seeing is believing?

Unfortunately, the real reason that he was here has not been resolved. Maybe soon?

Well, just compared Dish vs OTA again today during NFL games. Obvious difference, to me at least, between the two. Better color, sharper picture, fewer artifacts using OTA.

That is interesting as my signal strength for Cinemax (129 tp27) is 55. Wouldn't the impact on quality due to FEC be consistent across channels with a similar signal strength? My SpikeHD (129 tp26) has a signal strength of 55 as well, yet the quality of the image is quite different. I would expect that the digital OTA signals also include some type of forward error correction.

Remember that there are so many factors we are dealing with, including differences in quality at the original source. Your FEC is doing the best it can, equally, at 55 for both streams, but the final result is still dependent on other factors. A signal quality of 55 doesn't tell us which bits of data are corrupted or how they will manifest in the picture.

Another thing we have to consider is that it takes more signal (approximately 6 times the amount per second) to render an HD picture than an SD. Even comparing SD to SD or HD to HD, the complexity of the scene and the amount of motion (fast vs. slow) both factor into determining the final quality of the two streams at similar signal readings.
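A quick way to sanity-check the "approximately 6 times" figure is to compare raw pixel counts per frame. This is a minimal sketch assuming 1920x1080 for HD and 720x480 for SD (the post doesn't say which formats it compares); compressed bit rates don't scale exactly with pixel count, so treat it as a rough cross-check only.

```python
# Assumed resolutions: 1080-line HD vs. 480-line SD. These are illustrative
# choices, not formats named in the post.
HD_WIDTH, HD_HEIGHT = 1920, 1080
SD_WIDTH, SD_HEIGHT = 720, 480


def pixels_per_frame(width: int, height: int) -> int:
    """Return the number of pixels in one frame."""
    return width * height


hd = pixels_per_frame(HD_WIDTH, HD_HEIGHT)
sd = pixels_per_frame(SD_WIDTH, SD_HEIGHT)
ratio = hd / sd

print(f"HD pixels: {hd}, SD pixels: {sd}, ratio: {ratio:.1f}")
```

With these assumptions the ratio comes out to exactly 6, which lines up with the figure quoted above.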

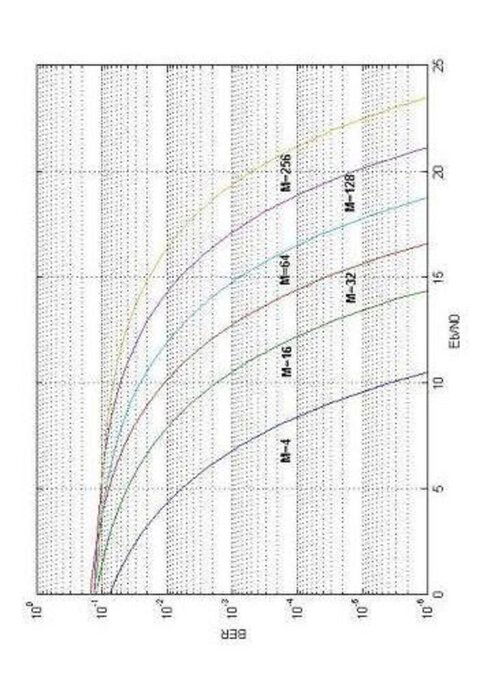

I have included a graph to illustrate. I have rotated the graph so that it would represent increase when read from left-to-right. You might imagine your signal meter bar across the bottom.

The curves on the graph each represent bit streams of differing complexity. The M4 curve represents the simplest SD stream, while M256 represents the most complex. The scale on the right, labeled Eb/No, is equivalent to SNR. What we need to see here is that at an Eb/No of 10, the simple bit stream (M4) gets a BER of 10^-6, which is good quality. But at the same Eb/No, the complex bit stream is only at a BER of 10^-1, which is just beyond "signal lock". So while your signal quality reading is 55 for two different feeds, the resulting BER and PQ will still differ.
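The M4 through M256 labels look like the textbook BER curves for M-ary QAM. Assuming that is what the graph plots (an assumption; the graph itself isn't reproduced here), the sketch below uses the standard nearest-neighbour approximation for Gray-coded square M-QAM on a noise-only (AWGN) channel to show how the same Eb/No gives very different BERs as the constellation gets denser. It describes raw errors before any FEC.

```python
import math


def q_function(x: float) -> float:
    """Gaussian tail probability Q(x) = P(N(0, 1) > x)."""
    return 0.5 * math.erfc(x / math.sqrt(2.0))


def qam_ber(m: int, ebno_db: float) -> float:
    """Approximate BER of Gray-coded square M-QAM over an AWGN channel.

    Common nearest-neighbour approximation; it is exact for M = 4 (QPSK).
    """
    k = math.log2(m)                  # bits carried per symbol
    ebno = 10 ** (ebno_db / 10.0)     # convert dB to a linear ratio
    prefactor = (4.0 / k) * (1.0 - 1.0 / math.sqrt(m))
    return prefactor * q_function(math.sqrt(3.0 * k * ebno / (m - 1)))


for m in (4, 16, 64, 256):
    print(f"M{m:<3} at Eb/No = 10 dB: BER ~ {qam_ber(m, 10.0):.1e}")
```

At 10 dB this gives roughly 4e-6 for M4 and roughly 8e-2 for M256, the same orders of magnitude as the 10^-6 and 10^-1 read off the graph.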

Before Dish Network supposedly "improved" the signal meter (leveling the signals across all receivers), this was easier to see. A simple SD receiver (a 311, for example) would give readings over 100 on both 110 and 119 when the dish was peaked. But when an HD receiver was installed on the same system, the signal readings were in the mid 80s (in STL).

At the time I didn't understand why the HD receivers produced such a low reading, but I used this information to double check alignment. I would check the signal meter on a simpler receiver to make sure the dish was properly pointed. If the signal meter on a 311 that was on an HD dish was as good as on the Dish 500, I was confident (despite lower HD receiver readings) that alignment was good.

Since the signal meter "improvement", they have caused all receivers to mimic the readings of the HD receivers and have decreased the precision of the meter. According to Dish, this was changed to reduce confusion over the variation in meter readings. What they actually did was to remove the "known" and "familiar" readings because of the increase in signal strength complaints and the upcoming roll out of the under-performing Eastern Arc dish.

And, yes, OTA follows the same parameters and uses the same technology. The reason that OTA PQ is better than Dish (only slightly, with all things maximized) is that with the current size of the dishes in use, you can't even reach the point of too much signal (which causes overload and signal dropout) as you can with OTA. And with the "as long as you have lock" mentality and training provided by Dish, most people are viewing only a "portion" of the quality that the system can provide, compared with when the dish is accurately peaked for maximum signal across all satellites.

The hope of Dish Network is that continued improvement in coding efficiency will compensate for the dishes being too small and the installers not being trained correctly. In the meantime, the signal meter change has kept the complaints and the understanding of signal effects to a minimum, leading us to believe that what you see is what you get.

My hope, too, is that better coding will bring better results. Certainly, Dish has made no effort to alter training or to increase the size of the dish. And installers can still tell you, with a clear conscience, that signal doesn't matter, because that is what they've been taught.

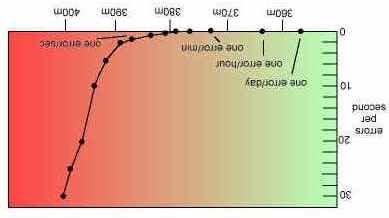

I am including another graph that represents Errors/Time. It has a red to green scale similar to what you see on your signal meter. I have oriented this graph to again represent the same aspect as your signal meter - increasing quality as you read to the right. You will notice that the curve represented is the same as other BER performance curves, with one exception. Where the errors per second "leave" the scale of the graph, they "rocket" skyward. This graph is pretty much identical to the graph of BER vs MER.
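Errors per unit time are simply BER multiplied by the bit rate, so the "rocket skyward" shape follows directly from how fast BER climbs as Eb/No drops. This minimal sketch assumes QPSK on a noise-only channel and a hypothetical 30 Mbit/s stream; neither is a published Dish parameter, and the counts are raw errors before FEC.

```python
import math


def qpsk_ber(ebno_db: float) -> float:
    """BER of Gray-coded QPSK over AWGN: Q(sqrt(2 * Eb/No)) = 0.5 * erfc(sqrt(Eb/No))."""
    ebno = 10 ** (ebno_db / 10.0)
    return 0.5 * math.erfc(math.sqrt(ebno))


def errors_per_second(ber: float, bit_rate_bps: float) -> float:
    """Expected raw bit errors per second, before any error correction."""
    return ber * bit_rate_bps


BIT_RATE = 30e6  # hypothetical 30 Mbit/s stream

for ebno_db in range(0, 13, 2):
    eps = errors_per_second(qpsk_ber(ebno_db), BIT_RATE)
    print(f"Eb/No {ebno_db:2d} dB -> {eps:.3g} errors/s")
```

Reading the printout from the bottom up mirrors the graph read from right to left: a few dB less Eb/No multiplies the error count by orders of magnitude.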

Jeff, you keep churning out all this info but we have never seen real world examples. How about some screen caps of some programs at various signal strengths, Dish or OTA.

One thing I am happy about lately is that the movie channels seem to be running at higher bitrates than the normal HD channels. They look nearly on par with my OTA locals.

There are two factors that determine bit rate. The first is what is sent from Dish. The second is how well it is received by your system. It is not just how well your dish is peaked, but also how well your system is installed. Poor connections can cause "slow data" (a lower effective bitrate), as well as low signal strength as it relates to signal quality.

In the "old days" of signal surplus, the greater amount of signal produced a higher quality signal (higher SNR, lower BER) that rarely allowed us to see any picture irregularities (e.g. a 311 with signal in the 100s). The fundamental relationship of signal power to noise power was well in favor of high quality.

Currently we are in a signal famine regarding HD. Due to dramatically increased noise from added components in receivers, added receivers in systems and greater demands for HD, we have to make the best use of what we have by maximizing signal strength at the dish, and reducing noise by attention to detail in wiring, connections, and grounding.

Aren't you guys discussing the real world examples? Haven't you seen the pictures posted by others?

I'm just happy that after 3 years there is actually discussion about picture quality! No one would even admit that digital picture could vary 3 years ago. Having seen and corrected the deficiencies in PQ, I've spent these last three years researching the subject and have found that the science does accurately explain the visual deficiencies that can be witnessed on screen.

Now that we're moving past the denial phase, we can get on to the answers.

Here's some pictures from the government group studying the same problems.

Video Quality Research Home Page

That's because it is not signal strength dependent. Don't read too much into his post; most of us ignore it.

agreed

Jeff just spews the same thing over and over

Everyone's TVs, viewing distance and eyes are different. I want your screen caps from two different signal levels so I can see the difference, because I don't believe anything you say. You are the only one on this forum who believes this, so to make us believers you need to show evidence. To date you have shown none.

The link you provided is strictly images of various compression artifacts and has nothing to do with them being caused by signal strength....just overcompression.

Oh Jesus H.....STOP!

You keep spewing the same thing over and over thinking the sh*t will stick to the wall. When you have no clue what you're talking about...The reason for higher signal is rain fade. Why is it folks who live within a spot beam have a signal of 55-60 and folks on the edge have a 35 signal yet they get the same picture? Why do you think Dish "neutered" the meter? To keep techs from slapping up a dish, getting a 100+ signal without trying and saying "yep looks good".

One day, for fun, I took a DBS dish and my 811 with the old meter while I was working on something. Aimed the dish without trying and boom, 100+ on the meter. After the software download it was around 48-50, yet the picture looked the same.

Why do you think I installed a 36" dish at 61.5 for a member here? Sure as hell not to have them say "yay! I have a 78 on a few transponders". He wanted it for rain fade issues. He didn't like his signal going out with dark clouds.

I do enough aiming of my C-band, Ku-band and DBS dishes to know what I need to keep a stable signal. The FEC does determine what the MINIMUM signal is. In the FTA world there used to be a channel called "White Springs TV"...it had an FEC of 1/2. Folks in the FTA area were able to keep the picture stable with a signal quality of 10...normally it's 30-32.

Last week I watched a football game in uncompressed HD (from the source)...signal quality was low (22-25) yet the picture was stable as could be. Picture looked damn good.

On my Shaw Direct system I had a Primestar dish set up for HD and got results of +6.0 to +7.2 for HD...+3.0 is the minimum. I had a 1.2m dish lying around, set that up, and now I have numbers in the +9.0 to +10.6 area. But guess what? PICTURE LOOKS IDENTICAL!!

So let's quit spewing the same thing about "oh, your signal needs to be higher".

BINGO! We have a winner!

I'm not posting for you. I am posting for the ones who ARE capable of seeing the differences and are searching for answers.

The Lord as my witness, everything I have posted is scientifically sound, accurate, and truthful.

I pray that your comments don't continue to rob more people of getting the high quality picture they deserve.

It's my understanding (and just about everyone else's) that if the signal quality drops below the level at which the forward error correction scheme can reconstitute 100% of the original bits, the result won't be mosquitos, grain, or whatever other name one gives to the results of overcompression. The result will be an obvious break in the picture and/or sound. The bits in flight don't know which ones represent high-frequency or low-frequency detail. That's determined by the compression system before they're transmitted. Are you seriously trying to argue otherwise? Because that's what it sounds like, and it's ludicrous.
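The all-or-nothing behaviour described above can be sketched with a block code that corrects up to t symbol errors: each block is either repaired completely or fails. As an illustrative assumption, this uses the RS(204,188) outer code from DVB-S (204-byte blocks, up to 8 byte errors corrected) and treats byte errors as independent; Dish's actual coding chain may differ.

```python
from math import comb


def block_failure_probability(n: int, t: int, p: float) -> float:
    """Probability that a block of n symbols has more than t symbol errors.

    Assumes independent symbol errors with probability p, so the error count
    per block is binomial(n, p). A decoder correcting up to t errors fails
    exactly when more than t occur. Summing the upper tail directly keeps
    precision when the failure probability is tiny.
    """
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(t + 1, n + 1))


# Assumed outer code: RS(204, 188) as in DVB-S, correcting up to 8 byte errors.
N_BYTES, T_CORRECTABLE = 204, 8

for byte_error_rate in (1e-4, 1e-3, 1e-2, 2e-2, 3e-2, 5e-2):
    fail = block_failure_probability(N_BYTES, T_CORRECTABLE, byte_error_rate)
    print(f"byte error rate {byte_error_rate:.0e}: block failure {fail:.2e}")
```

The failure probability stays negligible over a wide range and then jumps steeply over a small change in input error rate. Below that knee the output bits are identical to what was sent; above it, whole blocks are lost, which shows up as breakup or freezing rather than softer detail.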

Exactly, Jim...and that is what I gave as examples in post 134 (right above yours). When the signal gets too low, below the threshold of the FEC, you get breaks in the picture/pixeling.

I've worked with enough setups to know that a low signal will give you breakups of the picture. The FEC does matter. With a 7/8 FEC you need stronger signal quality (in the FTA world) than with a 3/4 or 1/2 FEC, but at threshold it will show pixeling, not blurriness in the picture.
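The other side of that trade-off is capacity: a lower code rate spends more of each transmitted bit on redundancy, which is why it holds lock at a weaker signal but leaves less room for the picture. This minimal sketch assumes a hypothetical 20 Msym/s QPSK transponder and ignores outer-code and framing overhead, so real payloads are lower.

```python
from fractions import Fraction


def useful_bit_rate(symbol_rate_sps: float, bits_per_symbol: int, code_rate: Fraction) -> float:
    """Information bit rate left after the inner FEC redundancy.

    Ignores outer-code, framing and pilot overhead.
    """
    return symbol_rate_sps * bits_per_symbol * float(code_rate)


SYMBOL_RATE = 20e6  # hypothetical 20 Msym/s transponder
QPSK_BITS = 2       # QPSK carries 2 bits per symbol

for rate in (Fraction(1, 2), Fraction(3, 4), Fraction(7, 8)):
    mbps = useful_bit_rate(SYMBOL_RATE, QPSK_BITS, rate) / 1e6
    print(f"FEC {rate}: {mbps:.1f} Mbit/s payload, redundancy fraction {1 - rate}")
```

Going from 7/8 to 1/2 on the same transponder gives up 15 of 35 Mbit/s in exchange for the lower threshold.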

Many times I log a sports backhaul that is at threshold and I get freezing and dropouts...not a blurry picture. A blurry picture is due to overcompression, and I see enough of that on some FTA transponders.

Jim, you have an FTA system, so you know what I'm talking about.

3 dishes

I have 3 dishes, and my lowest signals are in the 50s and the high ones are in the 70s. I rarely have rain fade, but I don't see a better picture than when I had lower signal levels. I've been able to lock and hold a good picture with levels as low as 17. Jeff, I have stated that before. I'm not saying that is the level one should have, but anything above about 30 (on low-signal TPs) is for rain fade. Man, reading all of your stuff is hours of my life I won't get back.

The viewing distance thing is b.s. An image that requires 10Mbit/s to look good at 1 foot from the screen will look good at 10Mbit/s whether you are 1 foot or 12 feet from the screen. Try to run the image at 5Mbit/s and it will look bad at 1 foot, and perhaps workable at 12 feet. That doesn't mean that everyone should sit 12 feet from the screen. It means that Dish either needs to run the show at 10Mbit/s or needs to improve its encoding so the show looks as good from 1 foot at 5Mbit/s as it does at 10Mbit/s.
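One way to put numbers on that argument is the average number of compressed bits the encoder has for each pixel of each frame; viewing distance changes whether you notice the shortfall, not how much data describes the picture. This sketch assumes a hypothetical 1920x1080 stream at 30 frames per second.

```python
def bits_per_pixel(bit_rate_bps: float, width: int, height: int, fps: float) -> float:
    """Average compressed bits available per pixel per frame."""
    return bit_rate_bps / (width * height * fps)


# Hypothetical 1080-line HD at 30 frames per second.
WIDTH, HEIGHT, FPS = 1920, 1080, 30

for mbps in (10, 5):
    bpp = bits_per_pixel(mbps * 1e6, WIDTH, HEIGHT, FPS)
    print(f"{mbps} Mbit/s -> {bpp:.3f} bits per pixel")
```

Halving the rate halves the per-pixel budget (about 0.16 down to about 0.08 here), and the encoder has to discard detail to fit, regardless of where the viewer sits.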

Bringing viewing distance into the discussion of HD picture quality on an HD television is inappropriate. Where viewing distance can play a role is when watching SD programs on an HD television. SD programs do not have enough data in them to avoid artifacts on an HD television. Some televisions use techniques (upconverting) to guess at the missing information and produce a better-looking SD picture; other TVs don't. How good your TV is at doing that plays a role in how far away you should be to see the best image.